Background

The Docker Hub

When you deal with containers, Docker Hub plays an essential role. It makes it easier to instantiate & test new environments. With a recent update on usage and pricing, Docker Hub offers reasonable limits for free users and competitive pricing for paid users considering the convenience it provides.

Are there any alternatives to Docker Hub?

There are alternative registries available too, such as quay.io. The big cloud service providers - Amazon Web Services, Microsoft Azure, and Google Cloud - also offer Container Registries. You can self-host a Container Registry too.

Why would you need to host a Container Registry yourself?

Within an enterprise, there are occasions when you would prefer to host the Docker images yourself.

- You do not wish to rely on a third party container registry for your core services.

- Due to organizational data security policies, you cannot save your images on a third party registry.

- Your servers are in a private network, and they do not have direct connectivity to the internet.

- You would like to save costs or provide better network speeds by hosting Docker images locally.

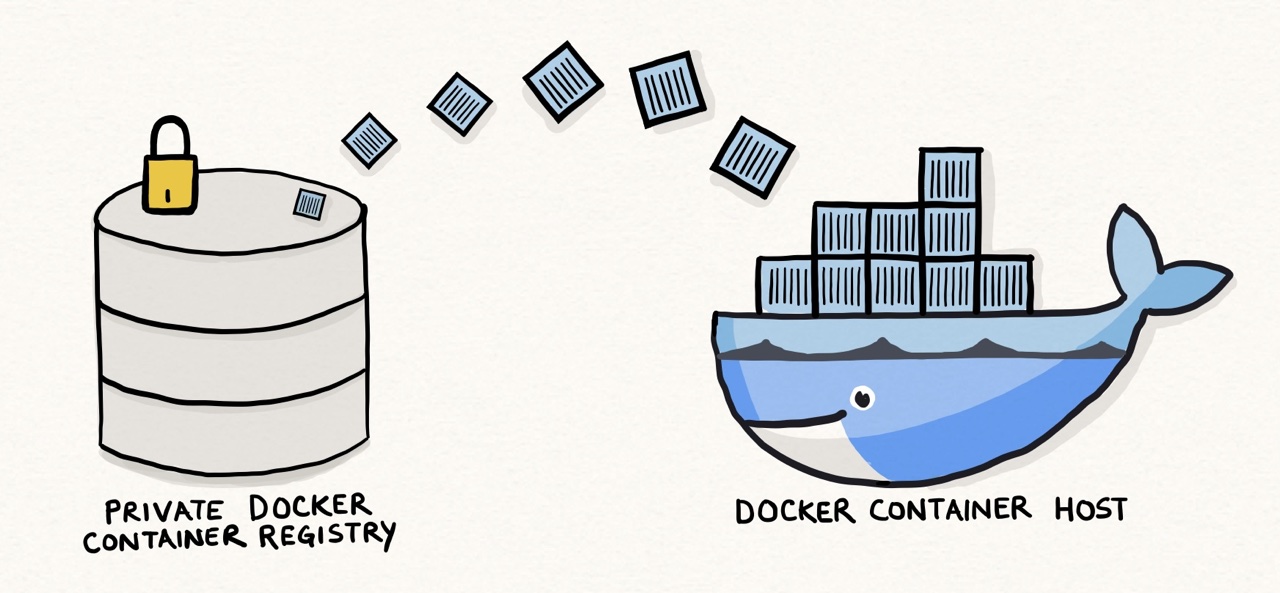

How would a Self-Hosted Container Repository work?

Docker is open-source software, and one of its utilities is the Private Registry Server. The Docker Documentation also provides useful information on how to deploy a registry server yourself.

Official Docker Documentation

NOTE: The Self Hosted Docker Registry server requires `https://` support (a TLS/SSL certificate).

- If you plan to run it on `http://`, then please refer to Deploying a plain HTTP registry.

- You just need to add the `insecure-registries` key in the `/etc/docker/daemon.json` file.

- You will not be able to have authentication support if you use an insecure registry.
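For the plain HTTP case, the client-side entry could look like the fragment printed by the sketch below. The helper name `insecure_registry_json` is hypothetical, and `registry.yourdomain.com:5000` is only a placeholder address; the Docker daemon has to be restarted after the file changes.

```shell
#!/bin/sh
# Print a daemon.json fragment that marks one registry as insecure (plain HTTP).
# The registry address is the first argument (a placeholder by default).
insecure_registry_json() {
  registry="${1:-registry.yourdomain.com:5000}"
  printf '{\n  "insecure-registries": ["%s"]\n}\n' "$registry"
}

# Usage: review the output, then merge it by hand into /etc/docker/daemon.json
# insecure_registry_json registry.yourdomain.com:5000
```

The function only prints the fragment instead of writing the file, so an existing `daemon.json` with other keys is not overwritten by accident.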

The following is a broad overview of how a private container registry server could work.

Set up a local registry server. Preferably, put it behind a reverse proxy, and restrict access either through password protection or source IP or both. I will demonstrate it.

Tag your Docker images and point them to your local registry. An example command is

docker tag ubuntu:18.04 registry.yourdomain.com/ubuntu:18.04

- Here, `ubuntu:18.04` is the default image pulled from Docker Hub. It could be a custom image that you’ve created using the `docker build` command. You may also build your image from scratch by creating a base image using `debootstrap` and then making other images using your base image.

Push that image to the local registry. An example is:

docker push registry.yourdomain.com/ubuntu:18.04

Your local registry is ready. You and your audience can now pull from it. An example command is

docker login registry.yourdomain.com # if there is password protection

docker pull registry.yourdomain.com/ubuntu:18.04

docker run -it registry.yourdomain.com/ubuntu:18.04 bash
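The tag-and-push steps above can be wrapped in a small helper. This is only a sketch: the function names `registry_ref` and `mirror_image` are made up for illustration, and it assumes a running Docker daemon and a registry you are allowed to push to.

```shell
#!/bin/sh
# Build the registry-qualified name for an image, e.g.
#   registry_ref registry.yourdomain.com ubuntu:18.04
# prints registry.yourdomain.com/ubuntu:18.04
registry_ref() {
  printf '%s/%s\n' "$1" "$2"
}

# Pull an image, tag it for the private registry, and push it.
# The chain stops at the first docker command that fails.
mirror_image() {
  registry="$1"
  image="$2"
  target="$(registry_ref "$registry" "$image")"
  docker pull "$image" &&
    docker tag "$image" "$target" &&
    docker push "$target"
}

# Usage:
# mirror_image registry.yourdomain.com ubuntu:18.04
```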

Steps to create a Private Container Registry

The following steps are just guidance on how you can create a private container registry. You may have to alter it to suit your implementation.

Assumptions

- You have an Ubuntu/Debian based machine - you can set one up on AWS, Digital Ocean, or E2E Networks.

- A client can reach your machine through a public IP address. You may need it if you are going to set up a TLS certificate to enable `https://` support. In this tutorial, the public IP address is `1.2.3.4`

- You have a readily available domain that’s pointing to `1.2.3.4`. In this example, the domain `docker.yourdomain.com` is pointing to `1.2.3.4`

- You already have Docker running on your machine. If you don’t have that ready, then first read the Official Documentation.

What we’ll be doing

- Start a Private Docker Container Registry instance.

- Set up a free TLS certificate for `docker.yourdomain.com` using the Let’s Encrypt Certificate Authority. You can use AWS or CloudFlare for your HTTPS needs instead of setting up a TLS certificate yourself.

- Set up a Reverse Proxy using Apache, and point `docker.yourdomain.com` to the registry container using the TLS certificates.

- Secure the registry.

- Test out the registry (`push` & `pull` images).

Let’s get started!

Hands-On

Step 1 - Start a Private Docker Container Registry instance

Create a directory for storing the data

Open up the terminal and log in to your server. Then create a directory.

mkdir -p /data/docker-registry

- Once a user pushes an image to the registry, it will get stored in the above directory

Run the Registry Container

Use the following command to start the container. This command will download the “registry:2” image from Docker Hub.

docker run -d \
  --name docker-registry \
  --restart always \
  -p 127.0.0.1:8080:5000 \
  -v /data/docker-registry:/var/lib/registry \
  registry:2

- Port `8080` on the `127.0.0.1` loopback address forwards requests to the container on port `5000` (the default port for the registry).

- https://hub.docker.com/_/registry has more information on the Docker Registry image.

The Private Container Registry is now ready to accept requests.
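Before moving on, you can check from the registry host that the container answers on the loopback port. The sketch below assumes `curl` is installed; the function name `registry_status` is hypothetical. It queries the `/v2/` base endpoint of the Registry HTTP API, which a healthy registry without authentication should answer with status 200.

```shell
#!/bin/sh
# Print the HTTP status code returned by the registry's API base endpoint.
# The base URL defaults to the loopback mapping used in Step 1.
registry_status() {
  base_url="${1:-http://127.0.0.1:8080}"
  curl -s -o /dev/null -w '%{http_code}' "$base_url/v2/"
}

# Usage on the registry host:
# [ "$(registry_status)" = "200" ] && echo "registry is up"
```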

Step 2 - Set up a Reverse Proxy with TLS/SSL Certificate

We need to configure a reverse proxy that sits in front of the Docker Registry and provides TLS (https://) support. If you are using AWS or CloudFlare, then you can already point the domain to your server and provide https:// functionality.

The following steps help you create a TLS certificate yourself using Let’s Encrypt. TLS is the successor to SSL.

What’s Let’s Encrypt

Let’s Encrypt https://letsencrypt.org/ is a free, automated, and open Certificate Authority (CA). It is an alternative for the paid SSL/TLS certificates offered by service providers like GoDaddy, Comodo, Verisign etc. The relevant difference between Let’s Encrypt and other paid services is that Let’s Encrypt’s certificates have a validity of 3 months (but you can automate renewal), and as a CA, Let’s Encrypt only offers Domain Validated (DV) certificates. That is, they will authenticate only your domain.

A DV certificate ensures that the in-transit information between a client and the server is always encrypted. However, unlike an Organization Validated (OV) or Extended Validation (EV) certificate, a DV certificate will not validate the authenticity of the Organization. OV & EV Certificates are more relevant in high-trust scenarios such as Financial Institutions/Banks.

Let’s Encrypt uses the ACME protocol to verify your domain ownership. There are many different client implementations for it, and we are going to use one called acme.sh.

📌 The following instructions assume an Ubuntu based machine

Install acme.sh

The instructions are available at https://github.com/acmesh-official/acme.sh

Log in to the server that will host the Reverse Proxy, and run the following command.

curl https://get.acme.sh | sh

Log out and log back in.

The acme.sh command will be available.

acme.sh --version

Points to note

- `acme.sh` installs itself in the `/root/.acme.sh` directory.

- It also creates an alias for your user in `~/.bashrc` to initialise its environment and set the relevant paths. That’s why the Logout/Login is required for the first time.

- It creates a cron entry (that you can check by typing `crontab -l`) so that auto-renew of certificates is possible.

Install Apache web server and enable proxy modules

I will use Apache as the Reverse Proxy. You can use NGINX or anything else that you have experience with.

apt-get install apache2

a2enmod proxy* rewrite headers ssl

systemctl restart apache2.service

Verify whether apache is working by visiting docker.yourdomain.com in a browser. It should show a default Ubuntu page.

Issue a Let’s Encrypt certificate for your domain

acme.sh --issue --domain docker.yourdomain.com \
  --server letsencrypt --webroot /var/www/html/

- Replace `docker.yourdomain.com` with your domain.

- Since it is the default Apache install, and the domain is pointing to the `/var/www/html` folder, `acme.sh` will create a few files in the `/var/www/html/.well-known` folder for Let’s Encrypt to access and thus verify the domain ownership.

- The above example demonstrates a simple `--webroot` method to issue certificates, which requires a pre-installed web server. `acme.sh` (and Let’s Encrypt) supports many other ways, including DNS based verification, which doesn’t require a web server or the machine to be available.

- I am using `--server letsencrypt` because as of version 3.0, `acme.sh` uses ZeroSSL (an alternative to Let’s Encrypt) as the default CA instead of Let’s Encrypt.

Once the certificate is successfully issued, you can install it.

Install the certificate for your web server to access

Even if the certificate is issued, you still need to refer to it in your web server configuration. Use the following:

mkdir /etc/ssl/custom

acme.sh --install-cert \
  --domain docker.yourdomain.com \
  --cert-file /etc/ssl/custom/docker.yourdomain.com.crt \
  --key-file /etc/ssl/custom/docker.yourdomain.com.key \
  --fullchain-file /etc/ssl/custom/docker.yourdomain.com-chain.pem \
  --reloadcmd "systemctl reload apache2.service"

- Of course, you’ll change the domain `docker.yourdomain.com` to your domain.

- The certificates are available in a location accessible to the web server. You can choose any other directory instead of `/etc/ssl/custom`

- The `reloadcmd` parameter takes a command that will execute once the certificates are saved. This ensures that when the certificates are re-issued (auto-renewed), the `apache2` configuration is reloaded so that it can use the latest certificates. You can have a sequence of commands if you use the certificates in other applications too (such as a mail server).
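Once the files are in place, you may want to confirm what was installed. The following is a minimal sketch that assumes the `openssl` command-line tool is available; the function names `cert_summary` and `cert_valid_for_days` are made up for this example.

```shell
#!/bin/sh
# Print the subject and the expiry date of an installed certificate.
cert_summary() {
  cert_file="${1:-/etc/ssl/custom/docker.yourdomain.com.crt}"
  openssl x509 -in "$cert_file" -noout -subject -enddate
}

# Succeed only if the certificate stays valid for at least the given
# number of days (default 30). -checkend takes a number of seconds.
cert_valid_for_days() {
  cert_file="$1"
  days="${2:-30}"
  openssl x509 -in "$cert_file" -noout -checkend "$((days * 86400))" >/dev/null
}

# Usage:
# cert_summary
# cert_valid_for_days /etc/ssl/custom/docker.yourdomain.com.crt 30 || echo "renew soon"
```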

Make configuration changes in Apache

In this example, Apache is only serving the docker registry. If you have a different setup with multiple virtual hosts, then adapt the process below for your environment.

Modify the file /etc/apache2/sites-enabled/000-default.conf and replace its content with the following.

<Virtualhost *:80>

ServerName docker.yourdomain.com

Alias /.well-known/ "/var/www/html/.well-known/"

RewriteEngine on

RewriteCond %{HTTPS} !=on

RewriteRule ^/(.*) https://docker.yourdomain.com/$1 [R,L]

</VirtualHost>

<IfModule mod_ssl.c>

<VirtualHost _default_:443>

ServerAdmin webmaster@localhost

ServerName docker.yourdomain.com

DocumentRoot /var/www/html

Alias /.well-known/ "/var/www/html/.well-known/"

SSLEngine on

SSLCertificateFile /etc/ssl/custom/docker.yourdomain.com.crt

SSLCertificateKeyFile /etc/ssl/custom/docker.yourdomain.com.key

SSLCertificateChainFile /etc/ssl/custom/docker.yourdomain.com-chain.pem

Header always set "Docker-Distribution-Api-Version" "registry/2.0"

Header onsuccess set "Docker-Distribution-Api-Version" "registry/2.0"

RequestHeader set X-Forwarded-Proto "https"

ProxyPreserveHost On

ProxyPass /.well-known !

ProxyPass / http://127.0.0.1:8080/

ProxyPassReverse / http://127.0.0.1:8080/

</VirtualHost>

</IfModule>

- Port `80` is auto forwarded to port `443` (https with TLS support)

- The `/.well-known` directory is excluded from being proxied. This ensures that during the TLS certificate renewal via `acme.sh`, Let’s Encrypt reaches the correct folder on the host machine (`/var/www/html/.well-known`) instead of the Docker registry container that’s forwarded on Port 8080.

- I am using the `proxy` module to forward all requests to the Docker Registry container as configured above in Step 1

Verify the configuration, and then reload or restart apache using

apache2ctl configtest

systemctl restart apache2.service

Check if the redirection is happening properly.

- Visit `http://docker.yourdomain.com` in a browser.

- If everything is in order, it will auto-redirect to `https://docker.yourdomain.com` and will show a pad-lock 🔒 icon too in the browser.

In case of errors, you can optionally review logs through

tail -f /var/log/syslog /var/log/apache2/*.log

Install the certificate again (OPTIONAL)

Just to ensure that the reloadcmd is working properly, re-install the certificate. If the result is successful, then your auto-renew will work without any errors.

acme.sh --install-cert \
  --domain docker.yourdomain.com \
  --cert-file /etc/ssl/custom/docker.yourdomain.com.crt \
  --key-file /etc/ssl/custom/docker.yourdomain.com.key \
  --fullchain-file /etc/ssl/custom/docker.yourdomain.com-chain.pem \
  --reloadcmd "systemctl reload apache2.service"

- I just re-ran the command that I followed above

Step 3 - Push an image in the registry

This step requires an image to exist already.

You can run the docker images command and select an image you’d like to push to the repository.

For example, if you run the following:

docker pull python:3

# OR

docker run -it --rm python:3 bash

Docker will pull the python:3 image from Docker Hub and save it locally.

You can tag this image to prepare it for pushing to your local registry.

docker images

docker tag python:3 docker.yourdomain.com/python:3

- You should see the `python:3` image when you run the `docker images` command. That’s the image you’ll be tagging.

Now push it to your local registry

docker push docker.yourdomain.com/python:3

- The `docker push` command will upload (and compress) the image to your private Docker registry. You can verify it by visiting the directory that you mapped in Step 1, while creating the Private Registry container.

- As per this example, you’ll check `/data/docker-registry` on the Docker Host machine. If you have a backup schedule, it is this directory that you should be backing up.
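Besides looking at the data directory, you can ask the registry itself what it holds. The sketch below uses the `_catalog` and `tags/list` endpoints of the Registry HTTP API v2 and assumes `curl` is installed; the function names are hypothetical. After the push above, the responses should be JSON documents that mention the `python` repository and its `3` tag.

```shell
#!/bin/sh
# List the repositories known to the registry (Registry HTTP API v2).
list_repositories() {
  curl -s "https://$1/v2/_catalog"
}

# List the tags of one repository, for example "python".
list_tags() {
  curl -s "https://$1/v2/$2/tags/list"
}

# Usage:
# list_repositories docker.yourdomain.com
# list_tags docker.yourdomain.com python
```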

Step 4 - Pull the image on a remote machine

Now that you have your private container registry, you can easily pull images from there.

On another machine, which doesn’t have the python:3 image already available, you can try the following

docker pull docker.yourdomain.com/python:3

#OR

docker run --rm -it docker.yourdomain.com/python:3 bash

If you don’t have an alternate machine, and you want to try it on the same machine from which you pushed that image, you first have to remove the image from your local machine.

docker images

docker rmi python:3

docker rmi docker.yourdomain.com/python:3

- You should make sure you do not have the image already that you’ll attempt to pull from your private container registry.

Then pull the image using

docker pull docker.yourdomain.com/python:3

docker images

It should work.

Step 5 - Securing the Private Container Registry

Enable IP based restriction or Password Protection so that only authorized users can use the container registry.

The official Docker Documentation on authenticating proxy with Apache also covers the scenario where you’d like everyone to be able to pull, but only restricted users to be able to push (by providing extra parameters in the Apache virtual host).

Add the IP restriction related configuration in Apache

In the virtual host config /etc/apache2/sites-enabled/000-default.conf, add the following under the SSL section

<IfModule mod_ssl.c>

# ...

<Location "/">

Order deny,allow

Deny from all

Allow from 202.54.15.30 172.17.0.1

Allow from 192.168

</Location>

# ...

</IfModule>

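- As you can guess, `Deny from all` would mean all requests are denied by default. `Allow from` allows access only from the IPs that you specify.

- These are Apache 2.2-style directives. On Apache 2.4 they are handled by the `mod_access_compat` module; the native 2.4 equivalent is `Require ip`.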

Reload Apache

apache2ctl configtest

systemctl reload apache2

Alternatively, add Password Protection

In the virtual host config /etc/apache2/sites-enabled/000-default.conf, add the following under the SSL section

<IfModule mod_ssl.c>

# ...

<Location "/">

AuthUserFile /etc/apache2/htpasswd

AuthName "Authentication"

AuthType Basic

require valid-user

</Location>

# ...

</IfModule>

- Here we provide a file in which the passwords are saved. The path of the file is `/etc/apache2/htpasswd`

Create a username & password too

cd /etc/apache2

htpasswd -c -m htpasswd docker

- The `-c` flag will create the file `htpasswd` if it doesn’t already exist, or overwrite it. 🚨 Remove the flag if you already have that file, otherwise you’ll lose all username/password information.

- `htpasswd` (the latter one) is the file name

- `docker` is the username
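To avoid the overwrite risk described above, the `-c` flag can be applied only when the file is missing. This is a sketch, not part of the original setup: the function name `add_registry_user` is hypothetical, and it assumes the `htpasswd` utility is installed.

```shell
#!/bin/sh
# Add or update a user in an htpasswd file without clobbering existing
# entries: -c is passed only when the file does not exist yet.
add_registry_user() {
  file="$1"
  user="$2"
  if [ -e "$file" ]; then
    htpasswd -m "$file" "$user"
  else
    htpasswd -c -m "$file" "$user"
  fi
}

# Usage (htpasswd prompts for the password):
# add_registry_user /etc/apache2/htpasswd docker
```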

Reload Apache

apache2ctl configtest

systemctl reload apache2

Use both IP Based Restriction & Password Protection

If you’d like to use BOTH the IP based restriction (allowing all your machines inside your organization), and Password based restriction (to enable occasional external access), then you can use the following in /etc/apache2/sites-enabled/000-default.conf

<IfModule mod_ssl.c>

# ...

<Location "/">

Order deny,allow

Deny from all

Allow from 202.54.15.30 172.17.0.1 192.168

Satisfy any

AuthUserFile /etc/apache2/htpasswd

AuthName "Authentication"

AuthType Basic

require valid-user

</Location>

# ...

</IfModule>

Reload Apache

apache2ctl configtest

systemctl reload apache2

Step 6 - Testing out the Login

If you’ve set password based authentication, then you need to log in before you can either push to or pull from docker.yourdomain.com.

The following should generate an error

docker run --rm -it docker.yourdomain.com/python:3 bash

Now log in and start the container again

docker login docker.yourdomain.com

docker run --rm -it docker.yourdomain.com/python:3 bash
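The same check can be made without Docker, directly against the registry API. The sketch below assumes `curl` is installed; the function name `registry_auth_status` is made up. With password protection only, an anonymous request should be rejected with 401 and an authenticated one should return 200. If you combined it with IP based restriction, a request from an allowed IP may return 200 without credentials.

```shell
#!/bin/sh
# Print the HTTP status of the registry API base endpoint over HTTPS.
# With one argument the request is anonymous; with a user name as the
# second argument, curl prompts for that user's password.
registry_auth_status() {
  host="$1"
  user="${2:-}"
  if [ -n "$user" ]; then
    curl -s -o /dev/null -w '%{http_code}' -u "$user" "https://$host/v2/"
  else
    curl -s -o /dev/null -w '%{http_code}' "https://$host/v2/"
  fi
}

# Usage:
# registry_auth_status docker.yourdomain.com
# registry_auth_status docker.yourdomain.com docker
```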

All done!